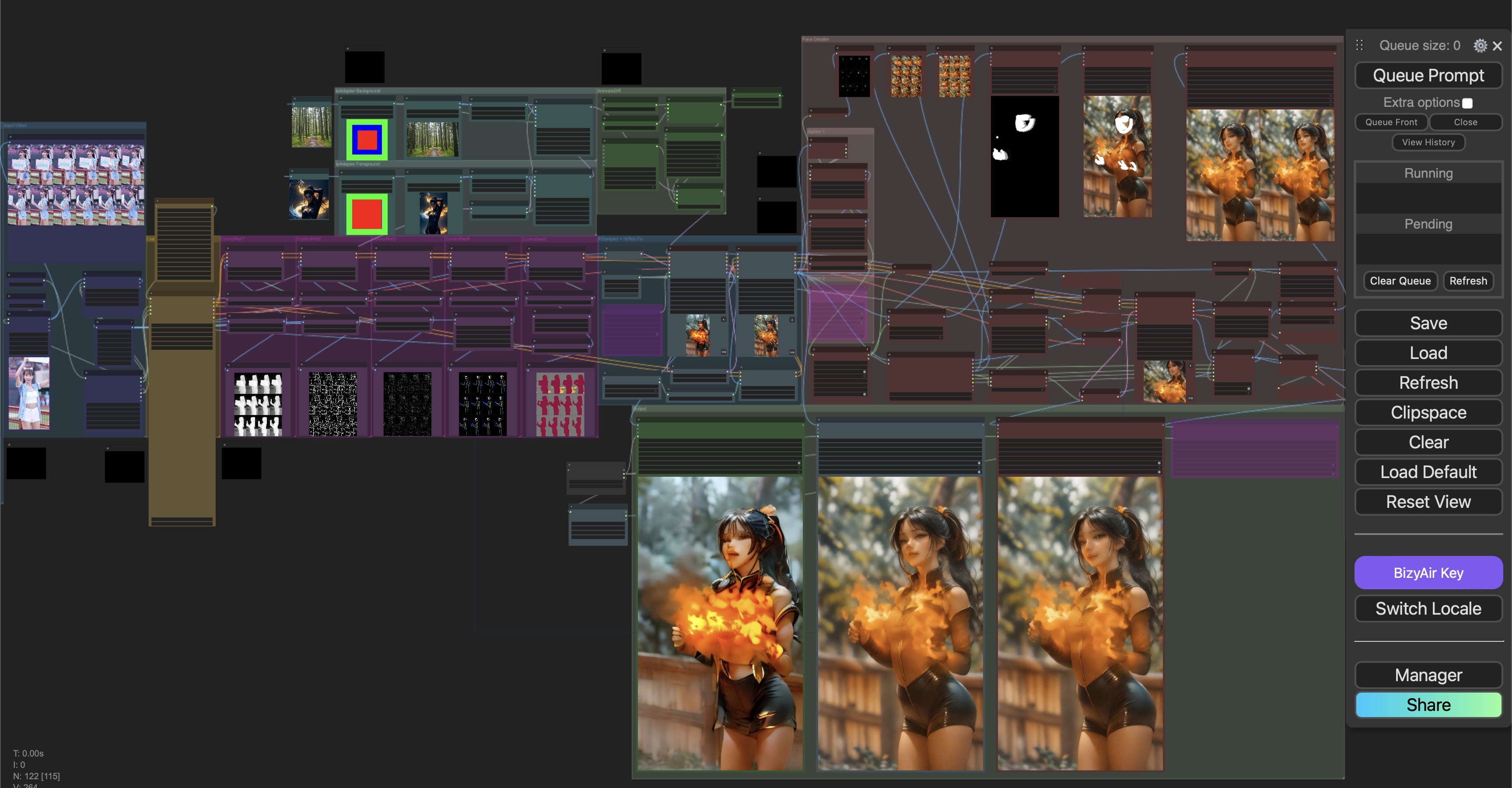

4. Video to Video Animation Workflow

1 Introduction

In this workflow, we transform an original video of a cheerleading girl (the Origin Video) into an anime-style animation of a medieval fantasy woman casting fire magic in a forest. We use ControlNet, masks, IPAdapter, and AnimateDiff to achieve this transformation. The process takes a base video and applies frame-by-frame transformation techniques to restyle and modify its visual elements.

After the animation undergoes one round of upscaling, we use the Face Detailer block to refine the character’s facial details. It reads the character’s skeleton from the upscaled animation to generate a mask animation, and the masked facial areas are then repainted to achieve a cleaner and more detailed result.

The complete workflow can be found below for reference and can be imported into ComfyUI for further adjustments.

Download the workflow JSON file

- Casting Spell Animation Output_Face Detailer:

- Comparison: Output Video vs. Original:

2 Workflow Overview

This project is adapted from a workflow by the author Akumetsu971.

- Workflow Setup:

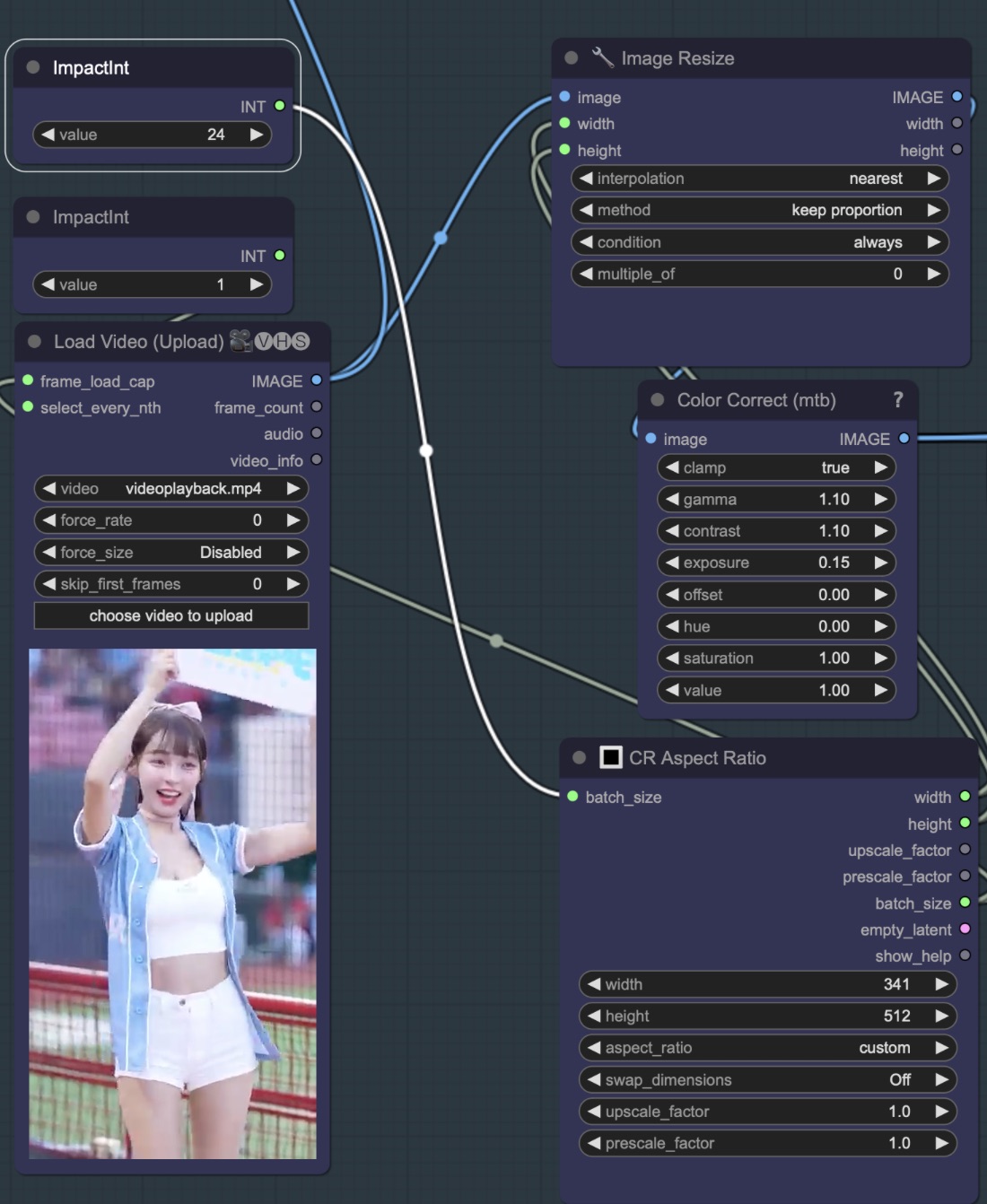

2.1 Step 1: Input Video

First, import the original video and set the positive and negative keywords (prompts) describing the video you wish to create. In this step, you can also configure the total frame count and how many frames to skip between each processed frame. Most source videos have a high frame rate, and processing every frame can consume large amounts of memory and time. Before choosing these values, assess the video’s quality, resolution, and the specific areas that require attention, so that the workload stays manageable.
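To make the effect of the frame settings concrete, here is a minimal sketch in plain Python. The names `select_frames`, `every_nth`, and `frame_cap` are hypothetical stand-ins for the skip and total-frame settings described above; they are not the actual node parameters.

```python
def select_frames(total_frames, every_nth=1, frame_cap=0):
    """Return the source frame indices that would be processed.

    every_nth and frame_cap are illustrative names (assumptions), not the
    real node parameters. frame_cap=0 means "no cap".
    """
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    # Keep one frame out of every `every_nth` source frames.
    indices = list(range(0, total_frames, every_nth))
    # Optionally limit the total number of frames sent to the sampler.
    if frame_cap > 0:
        indices = indices[:frame_cap]
    return indices


# A 10-second clip at 30 fps has 300 source frames.
source_fps = 30
total = 10 * source_fps
picked = select_frames(total, every_nth=2, frame_cap=100)
print(len(picked), picked[:5])
```

Keeping every second frame and capping the total at 100 frames cuts the workload from 300 frames to 100, at the cost of covering only the first part of the clip.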

- Load Video:

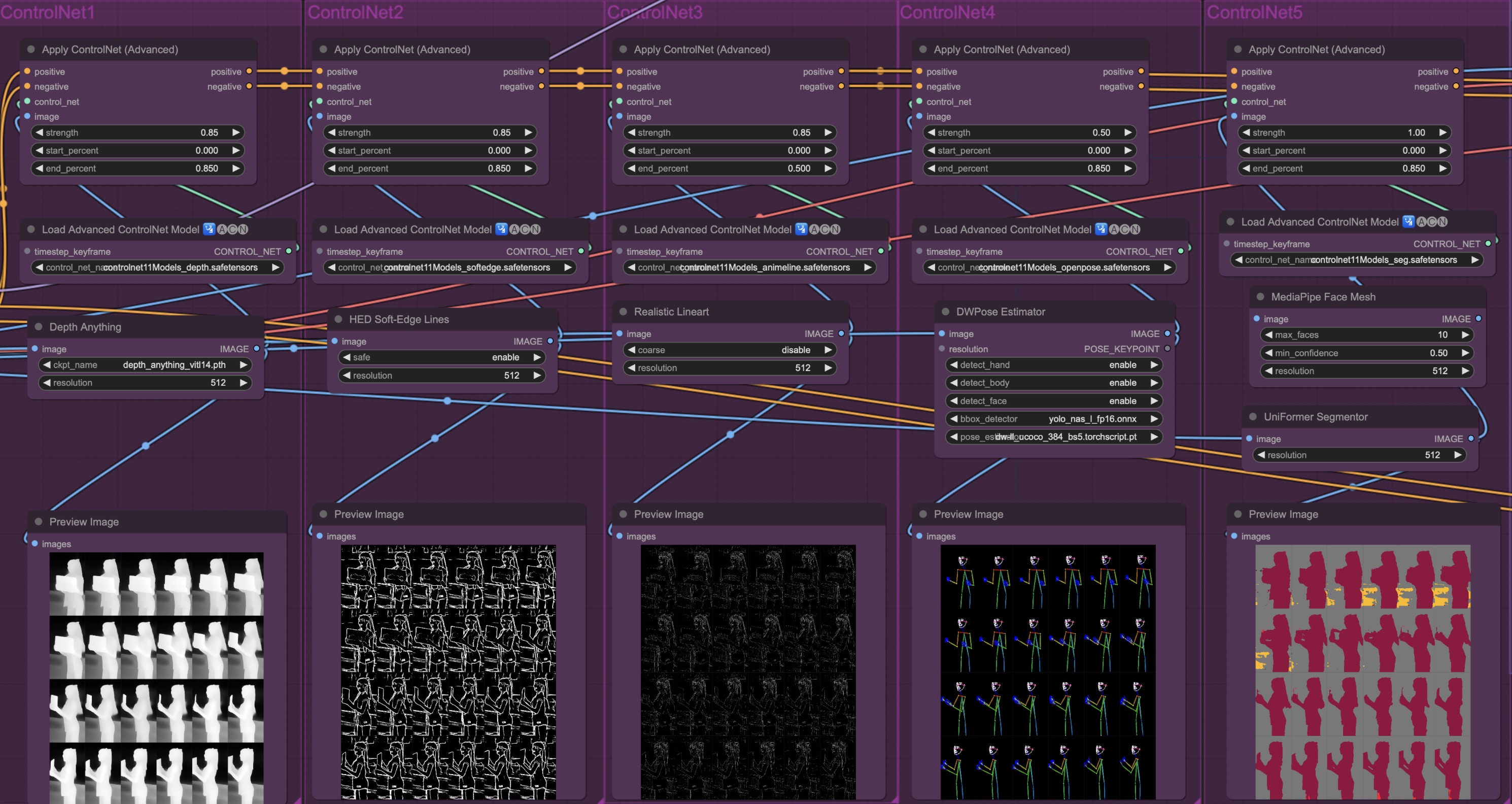

2.2 Step 2: ControlNet Configuration

In this step, we configure ControlNet to manage multiple aspects of the animation, including depth, edge detection, line art, and pose estimation. Each ControlNet model is assigned to a specific task, such as detecting depth or extracting key facial points. By using a combination of models, we can fine-tune the details of the animation and ensure that each element of the video is correctly processed.
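As a rough mental model, the ControlNet stack can be thought of as an ordered list of units, each adding one constraint to the conditioning. The sketch below is illustrative only: `ControlUnit`, `apply_stack`, and the strength values are assumptions chosen for the example, not settings taken from the workflow file or from the ControlNet API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlUnit:
    task: str        # what this unit constrains (depth, pose, ...)
    strength: float  # hypothetical weight; 0.0 disables the unit


def apply_stack(base_conditioning, units):
    """Chain the units in order, skipping any that are disabled."""
    conditioning = list(base_conditioning)
    for unit in units:
        if unit.strength < 0:
            raise ValueError(f"negative strength for {unit.task}")
        if unit.strength == 0:
            continue  # a disabled unit contributes nothing
        conditioning.append((unit.task, unit.strength))
    return conditioning


# Placeholder strengths, chosen only for illustration.
UNITS = [
    ControlUnit("depth", 0.6),
    ControlUnit("edge detection", 0.4),
    ControlUnit("line art", 0.5),
    ControlUnit("pose estimation", 1.0),
]

for entry in apply_stack(["prompt"], UNITS):
    print(entry)
```

Setting a unit's strength to zero in this model is a quick way to reason about what a given control contributes to the result.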

When ControlNet is not applied, key details may be lost, leading to less precise and dynamic animations. Below is an example of a video generated without the ControlNet configuration, for comparison.

- ControlNet Workflow Setup:

- Example video without ControlNet:

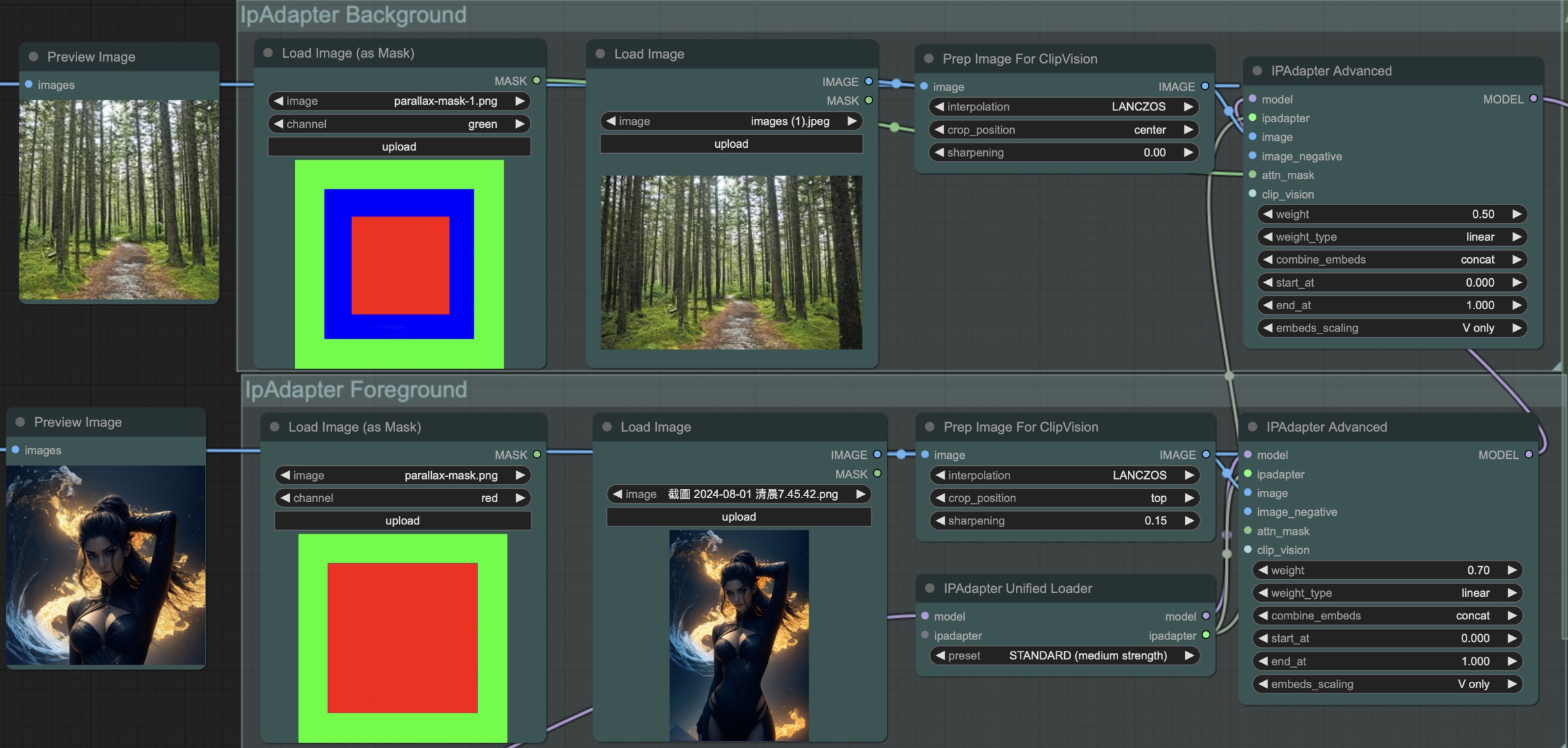

2.3 Step 3: Mask and IPAdapter Background

In this step, we apply the mask concept mentioned in the previous section to isolate the elements that are essential for the animation. At the same time, we use IPAdapter to define the foreground and background of the scene. In this example, the foreground is a medieval fantasy-style woman, and the background is a forest environment. The mask lets us control these elements separately, so that we can modify only the foreground character or only the background. By adjusting the weight and timing of these elements through IPAdapter, we create a balanced and dynamic animation where the character interacts with the environment seamlessly.
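The foreground/background split can be pictured as a mask and its inverse: one selects the character, the other selects everything else. The sketch below uses plain Python lists in place of image tensors, and the helper names `invert_mask` and `coverage` are hypothetical. It illustrates the concept only, not how IPAdapter consumes masks internally.

```python
def invert_mask(mask):
    """Turn a foreground mask (1.0 = character) into a background mask."""
    return [[1.0 - value for value in row] for row in mask]


def coverage(mask):
    """Fraction of the frame selected by the mask."""
    pixel_count = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / pixel_count


# A tiny 3x4 "frame": the character occupies the middle columns.
foreground = [
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
background = invert_mask(foreground)

print("foreground coverage:", coverage(foreground))
print("background coverage:", coverage(background))
```

Because the two masks are complementary, every pixel is influenced by exactly one of the two reference images in this simplified picture.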

- Mask and IPAdapter:

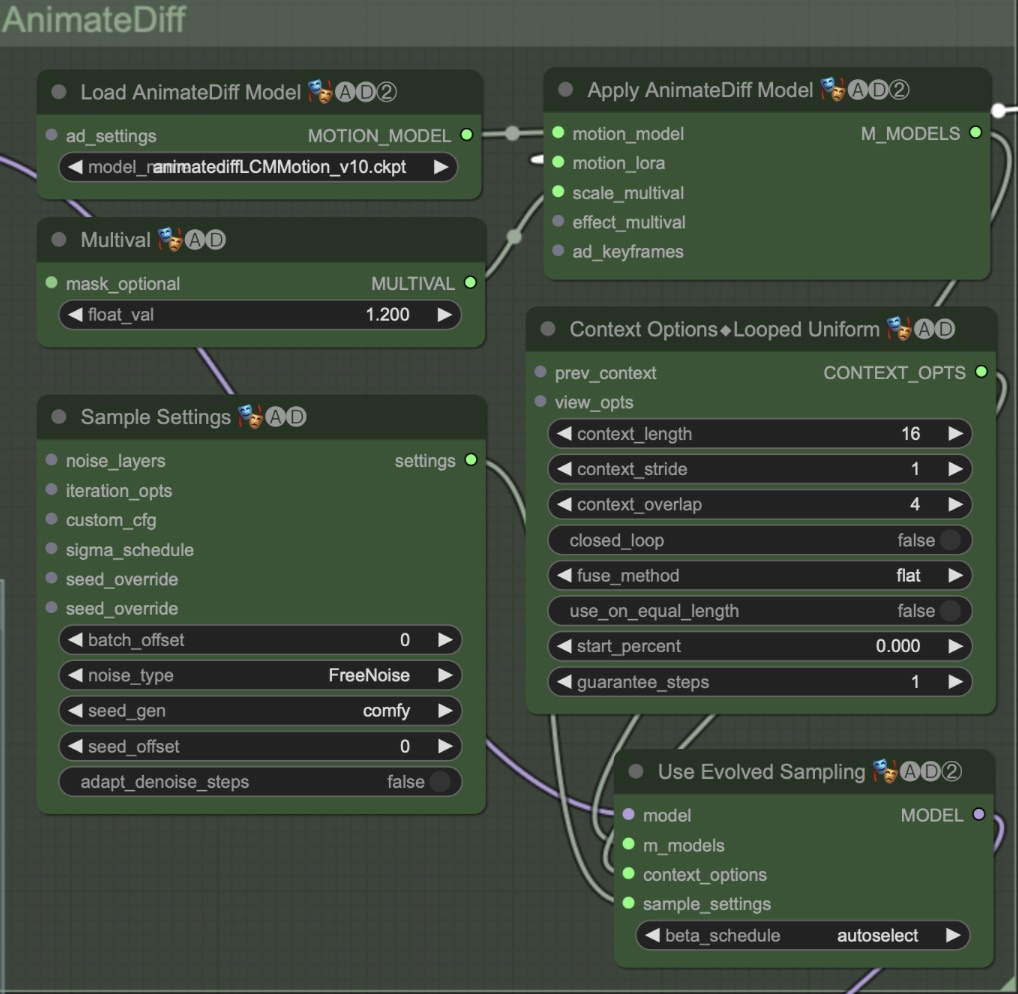

2.4 Step 4: AnimateDiff Application

In this step, we apply AnimateDiff to transform the video frames into a consistent animation style. AnimateDiff is responsible for blending the different elements together, such as the masked foreground and background images, while maintaining smooth transitions between frames. By adjusting the strength of the AnimateDiff model, we can control the overall look of the animation, such as the degree of stylization and the level of detail retained from the original video.

- AnimateDiff:

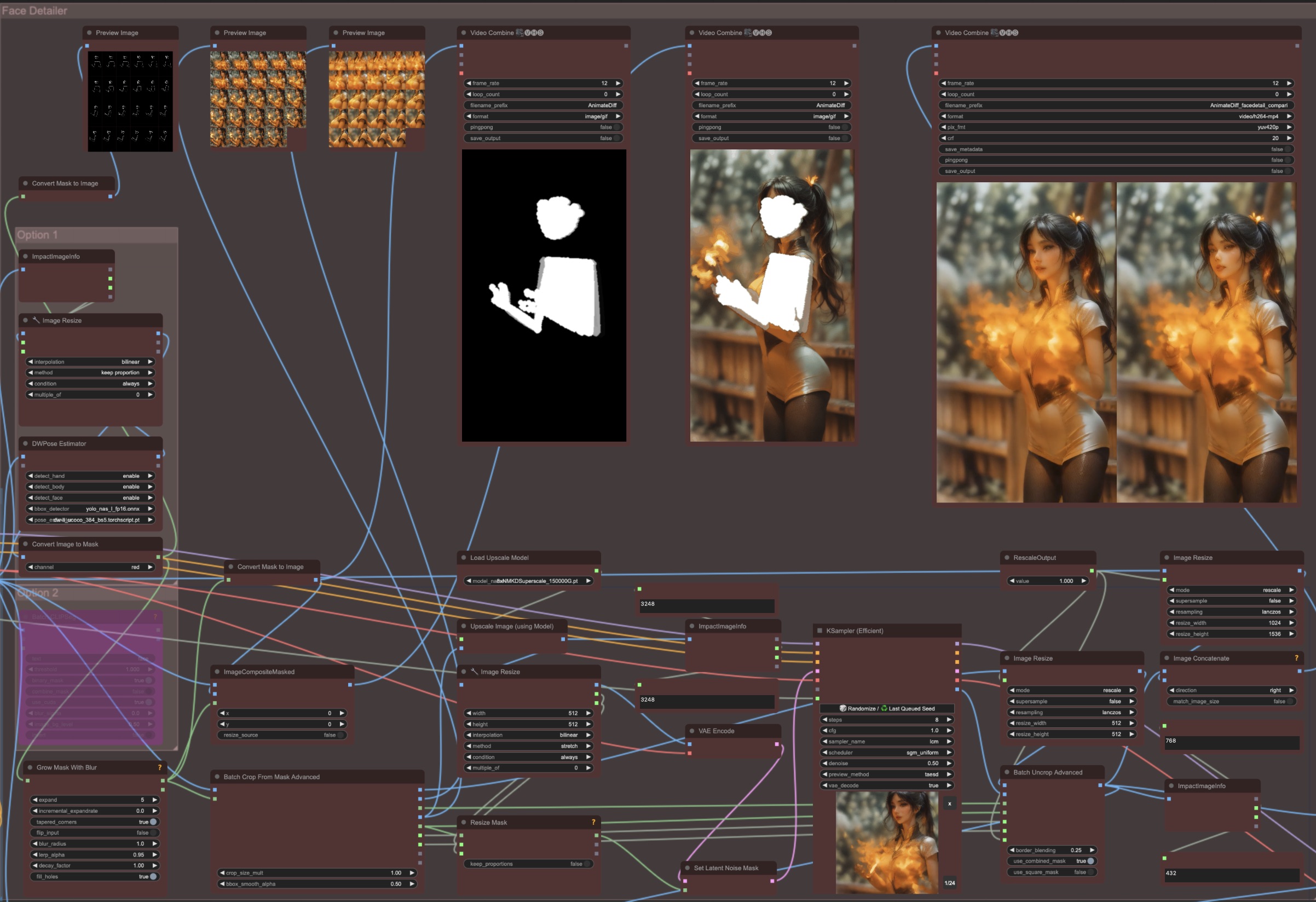

2.5 Step 5: Upscaling and Face Detail

After the initial animation has been generated, we apply Upscaling to improve the overall resolution and quality of the animation. Upscaling allows us to increase the clarity and definition of the details, making the final animation look sharper and more refined.

Once the animation has been upscaled, we use the Face Detailer module to focus specifically on the character’s facial features. This step is crucial to ensure that the face is not blurred or distorted during the upscaling process. The Face Detailer reads the character’s skeleton and key facial points, using them to generate a mask that isolates the face for more detailed rendering. This mask is then used to repaint the facial area with enhanced detail, ensuring a clear and accurate depiction of facial expressions in each frame.

By refining the face and ensuring high-quality results, this step elevates the overall aesthetic of the animation, making the character’s appearance more lifelike and visually appealing.
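Repainting only the face usually means finding the region the mask covers and working on that crop. The following is a minimal sketch of that idea in plain Python; `face_crop_box` and its `padding` argument are hypothetical, and the actual Face Detailer module may locate and pad the region differently.

```python
def face_crop_box(mask, padding=1):
    """Return (left, top, right, bottom) around the non-zero mask area.

    right and bottom are exclusive. Returns None for an empty mask.
    The box is padded and then clamped to the frame boundaries.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    height, width = len(mask), len(mask[0])
    top = max(min(rows) - padding, 0)
    left = max(min(cols) - padding, 0)
    bottom = min(max(rows) + 1 + padding, height)
    right = min(max(cols) + 1 + padding, width)
    return (left, top, right, bottom)


# A 5x6 frame where the face mask covers a 2x2 block.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
print(face_crop_box(mask, padding=1))
```

A small amount of padding gives the repaint step some surrounding context, which tends to help the refined face blend back into the frame.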

- Face Detail:

2.6 Step 6: Final Video Combination

Finally, after completing all the previous steps of generating frames, upscaling, and refining facial details, we use the Video Combine node to merge all the frames produced by the sampling (KSampler) and refinement stages into a single cohesive video file.

This step ensures that all elements (such as the refined face, detailed background, and overall animation) are synchronized and seamlessly combined into the final animation video.
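One detail worth checking when combining frames is the output frame rate. If frames were skipped in Step 1, the output rate has to drop by the same factor for the animation to play at the original speed. The sketch below shows the arithmetic; the function names and the `every_nth` parameter are assumptions for illustration, not node settings.

```python
def output_frame_rate(source_fps, every_nth):
    """Frame rate that preserves playback speed after frame skipping."""
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    return source_fps / every_nth


def clip_duration(frame_count, fps):
    """Duration in seconds of frame_count frames played at fps."""
    return frame_count / fps


# A 30 fps source, keeping every 2nd frame, yields 150 frames from 10 s.
fps_out = output_frame_rate(30, every_nth=2)
print(fps_out, clip_duration(150, fps_out))
```

With these example numbers the output plays at 15 fps and still lasts 10 seconds, matching the source clip.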

- Compare result:

3 Conclusion

In this workflow, we transformed a cheerleading video into an anime-style animation of a character casting fire magic, using ControlNet, IPAdapter, AnimateDiff, and Face Detailer. Together, these tools let us fine-tune the animation and enhance key elements such as the character’s face, the background, and the overall animation flow.

The ability to combine masks, adjust motion scales, and use Face Detailer for finer facial features allowed for an impressive level of detail in the final output. Each step, from creating masks to applying the final upscaling, contributed to a smooth and polished result.

With this workflow, users can explore different styles and approaches to video-to-video transformations, experimenting with various control nodes and configurations to fit their unique creative needs.